Posts for: selmslie

Apr 25, 2024 14:05:16 #

SuperflyTNT wrote:

So when you display that 24mp JPEG the CODEC is creating that display pixel from the raster pixels. When you export that JPEG to fit the display size it’s the program you’re exporting from. A difference in the algorithms used could create a difference but it would be pretty subtle.

It’s not exporting the image on the screen. The JPEG is made from the image in memory (virtual, raster image) which is full size - 24MP for the A7II and III, 45.7MP for the Z7.

The images in the photo viewer can be made to fill the screen just as they fill the 7x10.5 print.

Apr 25, 2024 13:01:05 #

Bill_de wrote:

Maybe this show should include more actors.

To clarify, this is not about resizing a JPEG. That would entail the possibility of additional degradation of a previously compressed JPEG.

It is about exporting an image at its full size vs. a reduced size. The smaller version requires combining pixels to end up with fewer total pixels than the full-size version. Both results would be compressed only once.

Apr 25, 2024 12:20:31 #

charles tabb wrote:

I would like to ask, what if the original picture had been taken with a Sony a7Rv that shoots @61 MP?

I suspect you would see something similar to this 5:4 cropped image from a Z7. The difference can be seen on the fur along the dog's back and around the eye.

Apr 25, 2024 11:00:36 #

Longshadow wrote:

I must have a "substandard" monitor as I see no difference flipping between them.

Now zooming in, one gets further into the image on one.

But I hardly zoom in to images, I look at the image as presented,

like if it was printed.

The point is to not zoom in. That way you will see all of the images at the same size, regardless of pixel density, as they would appear on a print.

Incidentally, I also viewed all of the images on my 4k monitor. When the images fill the screen (or at their default rendition) they are all the same size.

Apr 25, 2024 10:36:22 #

CHG_CANON wrote:

That doesn't happen in my LR images, but I'll have to search for something similar to now specifically test.

You can't get past the logic that, when making a single JPEG pixel from 9 raster pixels, something has to go.

Lightroom puts its pants on one leg at a time, just like Capture One.

Apr 25, 2024 10:19:31 #

petrochemist wrote:

Actually all filters reduce transmission of select...

Spikes in fluorescent, sodium and mercury vapor lights can be addressed with narrow band filters but most of us would rather just avoid the situation, live with it or try and fix it in PP.

Apr 25, 2024 10:08:28 #

Timmers wrote:

That last line I find bizarre, B&W filters may be used for many applications even in digital photography, even if most people never encounter their need.

The Wratten 2B is a UV filter that can and should be applied for most photography.

For the most part, a UV filter is superfluous for digital photography since a UV cut filter is already over the sensor. An additional UV filter on top of that simply doubles down, increasing the amount of UV getting blocked, if there is any left.

It's only when you remove the sensor's UV filter that you might need to take an extra step. When I converted my A7 II to monochrome, I had both the UV and IR filters removed so I could choose which one to use. Of course, I had plenty of glass UV filters lying around, but I ended up having to get a bunch of lens Cut filters for normal B&W work to also block the IR.

A UV filter is just the first stage before you start to apply the usual B&W color filters, beginning with light yellow. Even with film, once you go down that path you can skip the UV filter.

The Wratten filter series was originally intended for film. Digital images can be manipulated so easily that filters over the lens are pretty much a relic from past habits.

Apr 25, 2024 09:47:55 #

selmslie wrote:

Maybe you weren't looking for the specific differences I found.

Here is an example using a color image. The difference (the devil) is in the detail.

The reason for the difference is that the original 24MP export uses each RGB pixel from the raster image to create a single JPEG pixel. When going from 6000 pixels wide to about 2000, each JPEG pixel is built from about 9 raster pixels (3x3). This necessarily changes the level of detail, the clarity and the apparent sharpness.

It is impossible to avoid a difference.
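To make the 3x3 point concrete, here is a minimal sketch in Python. It assumes a plain box average, which is only an illustration: Capture One's actual resampling method is not stated here, and real exporters usually use more refined filters. The function name `box_downsample` and the 6x6 test patch are made up for this example.

```python
def box_downsample(pixels, factor):
    """Average each factor x factor block of a 2-D grayscale grid into one value.

    Assumption: a simple box filter, not the algorithm any particular
    raw converter actually uses.
    """
    rows = len(pixels) // factor
    cols = len(pixels[0]) // factor
    out = []
    for r in range(rows):
        row = []
        for c in range(cols):
            # Collect the factor*factor source pixels that feed one output pixel.
            block = [
                pixels[r * factor + dr][c * factor + dc]
                for dr in range(factor)
                for dc in range(factor)
            ]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


# Hypothetical 6x6 patch of fine detail: alternating black (0) and white (255) columns.
fine_detail = [[0 if c % 2 == 0 else 255 for c in range(6)] for _ in range(6)]

# Reduce by 3 in each direction, so 9 source pixels become 1 output pixel.
reduced = box_downsample(fine_detail, 3)
print(reduced)  # [[85.0, 170.0], [85.0, 170.0]]
```

The one-pixel black and white lines are gone; the reduced patch holds only two shades of gray. Whatever filter is used, nine values have to be collapsed into one, so fine detail at that scale cannot survive intact.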

Apr 25, 2024 09:22:55 #

CHG_CANON wrote:

Until consistent results can be created across multiple softwares and varied JPEG compression settings, I wouldn't consider the matter 'settled', for me at least. In my own experiments looking at other issues, I know I've run experiments that would have overlapped with these results / demonstrations, but I never reached the same findings.

Maybe you weren't looking for the specific differences I found.

Apr 25, 2024 09:18:39 #

Longshadow wrote:

That's because you know what you are looking for.

A person viewing a single image would have no idea of any difference.

My intent was to make a print that looked as much as possible like the image I was viewing on the screen in Capture One.

I was also able to see the differences in the Epson Print Layout screen but I don't want to alter the print there.

This is more of a challenge with a color image since ICC profiles get involved.

As for the viewer looking at a single image, other than for purposes of this discussion, nobody is going to see the image I don't like.

Apr 25, 2024 09:12:28 #

CHG_CANON wrote:

Looking at these examples, I'd need to know more d...

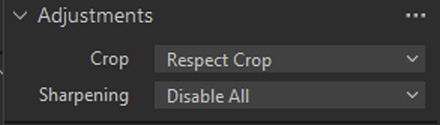

1. They were resized during the Export step at the same 80% compression.

2. I don't use Lightroom or any other editor. I'm pretty sure that the same thing would happen with other programs.

3, 4. These images were captured with a monochrome camera. Color space is irrelevant.

5. The only editing was to move the Exposure and Shadow levels. The file format was never changed.

6. Capture One, like Lightroom, is entirely non-destructive. There is only a raw file and a sidecar saved in the session.

All three files were saved at 80% compression and, other than resizing during the Export step, the only difference will be the number of raw pixels used to create an RGB pixel.

When I printed the three via Epson Print Layout at 7x10.5", the ppi values were 574, 390 and 195. I used Epson's Advanced B&W Photo type setting on Ultra Premium Presentation Paper Matte. No paper ICC profile was involved.

This is not a generalization. It is a specific example of a finely detailed B&W image. The phenomenon shown here is not likely to be as visible in a less detailed image. A color image would also make it less apparent.
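For anyone who wants to check the ppi figures, this is a rough sketch of the arithmetic: pixels along the long edge divided by the 10.5" print width. The three pixel widths below (6024, 4096 and 2048) are assumptions chosen because they reproduce the reported values; the actual export widths are not listed in this thread.

```python
def print_ppi(pixel_width, print_width_in):
    """Pixels per inch when pixel_width pixels span print_width_in inches."""
    return pixel_width / print_width_in


PRINT_WIDTH_IN = 10.5  # long edge of the 7x10.5" print

# Assumed export widths (not stated in the post); picked to match 574, 390 and 195 ppi.
assumed_widths = [6024, 4096, 2048]

ppi_values = [round(print_ppi(w, PRINT_WIDTH_IN)) for w in assumed_widths]
print(ppi_values)  # [574, 390, 195]
```

The same division works for any print size, so it is easy to see how far an export can be reduced before the ppi drops below what the printer driver would otherwise receive.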

Apr 25, 2024 08:48:14 #

Longshadow wrote:

I put each image in its own tab and kept flipping between them.

Other than a slight brightness change between them, nothing worth being concerned with for me.

Either as a "stand-alone" image would be fine.

It's the brightness change that might be troublesome. It changes the character of the image.

After resizing, it's not the same as the image I edited and the print looks different.

Apr 25, 2024 08:28:45 #

Same original file.

Apr 25, 2024 08:26:38 #

When you are looking at an image in your editor you are looking at the full size image.

When exporting the image as a JPEG, it might end up looking different if it is resized to save space, depending on the amount of detail present. It can also make a difference in the print.

The next post shows three different sizes of the same finely detailed high contrast B&W image. You might see the difference in the thumbnails.

If you download the images and look at them without pixel peeping, you can see how much resizing altered the appearance of the image. The difference also shows up when I print the three images.

Apr 25, 2024 05:57:35 #

Timmers wrote:

The internet has become such a screwed up mess of moronic information these days Sourses not tell us that a polarizer is an actuator and not an atuators. For years you could not locate a polarizer nor ND in the FILTER section of the Kodak order catalogue.

ND "filters" are color-neutral attenuators. If they have any color bias, they are not made properly.

Polarizers are also attenuators that act on polarized light. They should also be color-neutral.

Color filters were primarily intended for transparency film where you only got one shot at getting the color right. But the first step was to understand or control the color of the incident light.

With color print film you could alter the effect of light imbalances at the printing stage.

With raw digital capture, there is always a second chance to fix the colors.

Knowledge of color filters is about as irrelevant and useless as knowing how to adjust a carburetor now that gasoline-powered vehicles use fuel injection, like diesel engines always did.