Best self-timer mode method

Oct 18, 2018 16:36:35 #

BebuLamar wrote:

And I can still use one of those on my DSLR

Yep, same one. Had no idea they could be used on modern cameras. I'll have to look in my vintage Minolta system bag. (It's the size of a Smart-car.) I might still have it! >Alan

Oct 18, 2018 16:41:56 #

aellman wrote:

Yep, same one. Had no idea they could be used on modern cameras. I'll have to look in my vintage Minolta system bag. (It's the size of a Smart-car.) I might still have it! >Alan

I use it on my Nikon Df and as far as I know it's the only DSLR that can use it. For digital cameras I think the Leica M digital can use it.

Oct 18, 2018 16:44:54 #

BebuLamar wrote:

I use it on my Nikon Df and as far as I know it's the only DSLR that can use it. For digital cameras I think the Leica M digital can use it.

I thought it might be limited. It would still be fun just to find out if I still have it. Then I could frame it in a shadowbox and hang it on the wall as cutting-edge photographic art.

Oct 18, 2018 16:46:51 #

BebuLamar wrote:

And I can still use one of those on my DSLR

Only if you use a Nikon Df!

(Some of the Fuji XT bodies might use the plunger system too; I am not sure.)

Oct 18, 2018 17:20:53 #

--Bob

MT Shooter wrote:

I personally hate self-timers and never use them. I much prefer to use a wireless remote trigger so I can orchestrate the shot and shoot EXACTLY when I want to.

Oct 18, 2018 17:34:42 #

quenepas wrote:

Dear Hog Colleagues, Question on best optio...

Just curious: why did you want to shoot a 5-picture sequence?

Were you trying to capture some kind of action?

There is such a thing as taking a convenience function, and trying to make it do too much.

To try to make this discussion a little more interesting, I'd like to say a few words about the history of self-timers. Then I'll try to tie it in with current trends in digital cameras.

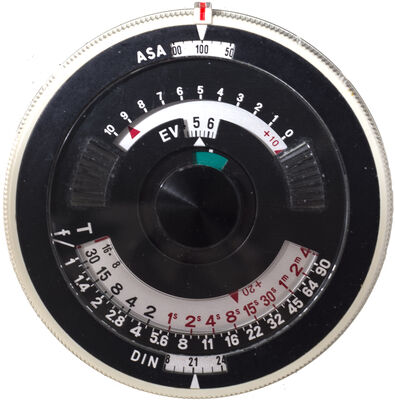

Originally, all self-timers were driven by clockwork. You cocked a lever, which wound a spring. When you depressed the shutter, it released a gear train powered by the spring. The gear train was restrained by a very simple escapement, usually just friction or air paddles. When it ran down to a certain point, it tripped the shutter mechanism.

The first (?) camera with an electronic self-timer was the Minolta XG-M SLR, introduced in 1981. It contained a 555 timer chip (one of the oldest ICs, and still in production). This is a small, simple, inexpensive analog chip, which did nothing but run the self-timer.
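
As general background on how a 555 can time a delay (the post does not describe the XG-M's actual circuit, so treat this as a generic sketch, not Minolta's design): in the chip's standard monostable, or one-shot, mode, a trigger starts the output pulse while a resistor $R$ charges a capacitor $C$ from 0 V, and the pulse ends when the capacitor voltage reaches two-thirds of the supply voltage $V_{CC}$:

$$
V_C(t) = V_{CC}\left(1 - e^{-t/RC}\right), \qquad V_C(t_d) = \tfrac{2}{3}V_{CC} \;\Rightarrow\; e^{-t_d/RC} = \tfrac{1}{3} \;\Rightarrow\; t_d = RC\ln 3 \approx 1.1\,RC
$$

With illustrative values $R = 1\,\mathrm{M\Omega}$ and $C = 10\,\mu\mathrm{F}$, $RC = 10\,\mathrm{s}$ and $t_d \approx 11\,\mathrm{s}$, in the range of a typical self-timer. Because the delay scales directly with $R$ and $C$, ordinary component tolerances shift it noticeably, which fits the point below that these delays were never very accurate.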

It worked very well, but there was a wrinkle: the camera had an electro-mechanical shutter. An electromagnet held the shutter open. This meant that long exposures tended to drain the battery. So the camera was no good for night photography, but otherwise it was a good camera. I still have one, somewhere, which still works.

The Canon AE-1 was the first microprocessor-equipped SLR. It had limited features, but sold very well. Canon got the cost down by automating production. They were pretty reliable for a cheap, plastic camera.

When digital cameras became commercially available in the 1980s, they all had microprocessors in order to run the LCD display and menu functions. So the microprocessor also ran the self-timer.

So for a number of years, cameras using all three implementations (mechanical, analog IC, and microprocessor) were being sold at the same time. None of the delays were very accurate, but accuracy is not a requirement for self-timers.

Both the clockwork timer and the 555 IC were dedicated to the self-timer function, and so are very simple. But the microprocessor timer is just a subroutine called by the main firmware program. So other camera features (menus, etc.) may not work while the self-timer is running. Such firmware programs are extremely large and complex.
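
To make the "just a subroutine" point concrete, here is a minimal Python sketch, not any manufacturer's firmware: a hypothetical `SelfTimer` state machine that a toy main loop polls once per tick. Because the countdown advances one step per tick instead of sitting in a blocking wait, the loop keeps servicing other work (the stand-in `handle_menu` callback) while the timer runs; firmware that called a blocking delay routine instead would freeze the menus for the whole countdown. The `shots` and `interval_ticks` parameters (also hypothetical) show how a multi-frame sequence, like the 5-shot sequence in the original question, adds state.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class SelfTimer:
    """Hypothetical self-timer that the firmware main loop polls once per tick."""
    delay_ticks: int         # countdown length, in main-loop ticks
    shots: int = 1           # frames to take once the countdown ends
    interval_ticks: int = 1  # ticks between frames in a sequence
    _remaining: int = field(default=0, init=False)
    _shots_left: int = field(default=0, init=False)

    def start(self) -> None:
        self._remaining = self.delay_ticks
        self._shots_left = self.shots

    @property
    def running(self) -> bool:
        return self._shots_left > 0

    def tick(self) -> bool:
        """Advance by one tick; return True when the shutter should fire."""
        if not self.running:
            return False
        self._remaining -= 1
        if self._remaining > 0:
            return False
        # Countdown (or inter-frame gap) finished: fire one frame and
        # reload the gap before the next frame of the sequence.
        self._shots_left -= 1
        self._remaining = self.interval_ticks
        return True


def run_main_loop(timer: SelfTimer, ticks: int,
                  handle_menu: Callable[[int], None]) -> List[int]:
    """Toy main loop: service the menus every tick, then poll the timer."""
    fired_at: List[int] = []
    timer.start()
    for t in range(1, ticks + 1):
        handle_menu(t)          # other features keep running during the countdown
        if timer.tick():
            fired_at.append(t)  # stand-in for tripping the shutter
    return fired_at


# Usage: 10-tick countdown, then a 5-frame sequence two ticks apart.
timer = SelfTimer(delay_ticks=10, shots=5, interval_ticks=2)
menu_calls: List[int] = []
print(run_main_loop(timer, 30, menu_calls.append))  # [10, 12, 14, 16, 18]
```

Every extra option here (frame count, interval) is one more piece of state the firmware must track and test, which is exactly the growth in complexity the post goes on to describe.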

Adding features to the self-timer, such as the ability to trigger sequences of photos, makes the firmware even more complex. And every year, hundreds of new features get added to digital cameras.

Digital cameras have only been around for a little over 40 years, but they have already become extremely complex embedded systems. If current trends continue, one wonders how complex they will be in another 40 years.

Complexity both creates bugs and makes it hard to find bugs by testing. If you wanted to test every state that a digital camera could be in, it would take hundreds if not thousands of years. (Just think about testing every combination of pixels on the sensor!) Any state transition that is not tested could be broken, and no one would know.

Complexity always seems to "leak" out of the box, as the original post illustrates. Self-timers used to do one thing: trip the shutter. But now there are several shutter modes. Complexity multiplies.
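
"Complexity multiplies" is literal arithmetic: if settings can be combined freely, the number of combinations a tester would need to cover is the product of each setting's option count. The Python sketch below uses made-up option counts (hypothetical, not taken from any real camera's menus) and counts only setting combinations, not transitions between states, which would make the numbers far larger still.

```python
from math import prod

SECONDS_PER_YEAR = 365 * 24 * 3600

# Hypothetical option counts for settings that might interact with the
# self-timer. Made-up numbers for illustration, not any real camera's menus.
SETTINGS = {
    "self_timer_delay": 4,  # e.g. off, 2 s, 5 s, 10 s
    "shots_per_timer": 9,   # 1 to 9 frames
    "shutter_mode": 3,      # e.g. mechanical, electronic first curtain, electronic
    "drive_mode": 5,
    "focus_mode": 4,
    "flash_mode": 6,
    "image_quality": 8,
}


def state_count(option_counts):
    """Distinct setting combinations: the product of each setting's option count."""
    return prod(option_counts)


def years_to_test(n_states, seconds_per_test):
    """Wall-clock years to test every combination once, one at a time."""
    return n_states * seconds_per_test / SECONDS_PER_YEAR


print(state_count(SETTINGS.values()))               # 103680
print(state_count(list(SETTINGS.values()) + [10]))  # 1036800: one more 10-way setting
# A hypothetical menu of 30 independent settings with 4 choices each,
# tested at one combination per second: roughly 3.7e10 years.
print(years_to_test(state_count([4] * 30), 1.0))
```

Adding one setting multiplies the total rather than adding to it, so even a modest menu quickly outruns any test schedule.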

There is no limit to the size of a firmware control program except the amount of ROM (to hold the executable) and RAM in the box. But long before that limit is reached, cameras will have become too complicated to implement, test or use.

The public seems to be blissfully unaware of the dangers of complexity, until a fly-by-wire airliner crashes or a nuclear reactor melts down. And users have no idea of the internal complexity of the electronic devices they use every day. Some (e.g., radios) are pretty simple. Others, particularly computers and embedded systems, are enormously complex.

Before the IBM PC was introduced in 1981, few people had a computer at home. Now your camera is a computer. But computers have not gotten more reliable since 1981; they have gotten much less reliable, because they have gotten vastly more complicated. DOS was almost bug-free. Every new version of Windows is a bug fest, and many of the bugs never get fixed.

But at least a computer has a monitor and a keyboard. It may also have system log files, self-test routines, program debugger software, and even an OS kernel debugger. At the very least, it will print error messages on the console.

But an embedded system is a black box. If it's having problems, it's like a sick cat: you can't ask it where it hurts. Most of the time, you have no clue what's wrong with a sick digital camera, or what to do about it. The system is too complex, and there is not enough information or diagnostic tooling available.

Computer programmers have a saying: "K.I.S.S.: keep it simple, stupid." But consumers view complexity as a good thing: more "advanced", more "high tech". More features are always better, right? Wrong. A feature you do not use can hurt you.

Yes, it is possible to build a talking AI camera. But only an idiot would want one.

By any reasonable standard, today's digital cameras are already way, way too complex. And they grow more complex with each new release.

So do smartphones, but smartphones have much larger development budgets, since they sell by the millions. So they can afford to be more complex than a camera can, especially since the market for digital cameras shrank for almost ten years before appearing to bottom out in 2017. There is no revenue to pay for ever-increasing R&D budgets.

"If something cannot go on forever, it will stop." (Herbert Stein)

The question is: will it stop before digital cameras become completely unusable, or after?

Oct 18, 2018 19:50:49 #

Oct 19, 2018 01:51:39 #

Bipod wrote:

Just curious: why did you want to shoot a 5-pictur...

Wow, I wasn't expecting to read "War and Peace"! Kidding. It's an excellent article. Watch those typos, though. I'm a proofreader, so they jump out at me. Not everyone cares, but as a talented writer you might consider reading your material slowly just before you send it. That's what I do, and you'll be amazed at what you'll find. SEE MY PRIVATE MESSAGE.

Best wishes,

Alan

Oct 19, 2018 09:36:22 #

tfgone wrote:

I use the Fuji remote app. You can totally control the camera from your cell phone, zoom, etc., and control the shutter.

Probably many other cameras have similar remote control apps.

I occasionally use Canon Connect if I want to use my phone as a viewing screen. But for group shots in which I am part of the group (which I think is the OP's issue), I use a remote. It allows me to keep my hand out of view and my eyes open and visible for the shot.

Oct 19, 2018 09:39:50 #

Bipod wrote:

Just curious: why did you want to shoot a 5-pictur...

When taking a group shot, you take multiple exposures so you can do better PP. As the group gets larger, the likelihood of closed eyes or odd expressions increases. Multiple shots allow you to swap faces among the shots until you have a nice shot with everyone looking good.
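
The effect of group size can be put into numbers with a deliberately simple model. Assume (a big simplification) that every person independently has the same chance `p_blink` of blinking or pulling a face in any frame, and that frames are independent. The function names and the 20-person, 5% figures below are hypothetical, chosen only to show the shape of the argument in Python.

```python
def p_clean_frame(n_people, p_blink):
    """Chance a single frame has nobody blinking (independent, identical people)."""
    return (1 - p_blink) ** n_people


def p_some_clean_frame(n_people, p_blink, n_frames):
    """Chance at least one frame of the burst is clean with no editing."""
    return 1 - (1 - p_clean_frame(n_people, p_blink)) ** n_frames


def p_everyone_swappable(n_people, p_blink, n_frames):
    """Chance every person is eyes-open in at least one frame (face swap possible)."""
    return (1 - p_blink ** n_frames) ** n_people


# Hypothetical numbers: 20 people, 5% blink chance per person per frame.
print(round(p_clean_frame(20, 0.05), 3))            # 0.358
print(round(p_some_clean_frame(20, 0.05, 5), 3))    # 0.891
print(round(p_everyone_swappable(20, 0.05, 5), 5))  # 0.99999
```

Under these assumptions a single frame is clean only about a third of the time, a 5-frame burst raises the odds of one clean frame to roughly 89%, and swapping faces between frames makes a usable composite almost certain.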

Oct 19, 2018 13:48:57 #

kskarma

Loc: Topeka, KS

You would have problems using one of these "Old School, but Still Cool" bulb releases as they connected to the camera shutter via the 'cable release' screw fitting...(cable release??...another reference to olden days...!!)...I don't know of any current cameras that still have this threaded coupling on the shutter button.

My preference for group shots, especially those with me in them...is to spend some time BEFORE the group is assembled, testing the camera set-up, making sure the tripod is not in anyone's way, looking through the viewfinder to make sure there is room for all of the people...(plus some extra room...just in case..cutting off body parts is illegal in most states!!)..making sure that the focus is in a manual mode...then firing off several test shots from the location where I will be standing in the final shot with my remote release...(I prefer the simplest 'gadget'...an IR remote such as the ML-L3 for Nikons...)..then....and only then, do I issue the call for the group to assemble.

NOTHING is more frustrating for your subjects than to wait and fidget while the photographer fiddles with the camera....ask me how I know...!!

When everyone is in place, I double-check through the viewfinder...making sure that everyone is in the frame. I find it helps to tell the group, "Get where you can see the camera....if you can't see it clearly, it can't see you...!" I know this might sound basic, but how many times have we seen a photo with just a sliver of someone's head in the shot...? Maybe they think my camera has "X-Ray" ability?? After I am satisfied with the group arrangement, I take my place and continue to converse with them...small talk, bad jokes, "I've been told you are all Professional Models.." etc...trying to keep everyone smiling...or at least not going to sleep...! When ready for the actual exposure, I tell them to watch the 'count down' light on the camera and that I will take a few shots in quick succession..., I count to three, to allow everyone to get their eyes open and looking pretty....then the shutter "Click"..[Grins]...! Most of the time, I will have the camera set to take 2-5 shots each time, so people should be aware of this series of shots.... If using flash, this won't work unless you have fast recycling studio strobes...but, for available light, it gives a few 'insurance' frames from which to choose.

After this first series, I will take a moment to go to my camera and quickly 'chimp' the results, especially checking focus on someone's eyes in the middle row, just to verify that "Murphy's Law" has not been enforced...! After I am satisfied with what we have taken, I will return to the group and repeat this a few more times...it only takes an additional 30 secs or so....time well spent...

I should also mention that the more people in the group, the greater the chances that someone will have a 'bad or goofy' expression, be looking at something else in the room...etc...etc... Keep in mind that many photo editing programs make it easy to move portions of one photo to another one...so you can do some Photo Surgery to get great expressions in your final output and make everyone happy....

EDIT....as I post this now, I see that quite a few others have expressed excellent thoughts on the same topic....good to have all of this input in UHH...!

Oct 19, 2018 15:46:53 #

dsmeltz wrote:

When taking a group shot, you take multiple exposures so you can do better PP. As the group gets larger, the likelihood of closed eyes or odd expressions increases. Multiple shots allow you to swap faces among the shots until you have a nice shot with everyone looking good.

Great point. Thanks!
