Posts for: bclaff

Dec 31, 2022 13:19:33 #

selmslie wrote:

... It's the white display of an empty folder on a computer screen...

To avoid any issues with the screen's pixels, the lens is focused manually at infinity and held close to the screen.

You might find this target helpful since it exposes red, green, and blue more evenly.

Dec 31, 2022 13:12:31 #

selmslie wrote:

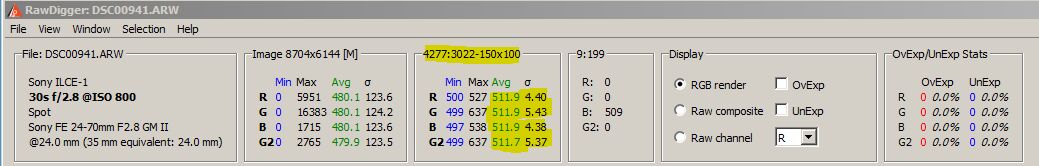

It's actually a very bright target...

Very strange. By my calculation this is only 1/3 stop brighter, but the values are much higher. Your SNR should be much higher for a bright target.

Dec 31, 2022 11:55:04 #

Since signal is going to be linear, Signal to Noise Ratio (SNR) is just a proxy for noise.

Your SNR values are quite low. This must be a very dark target.

You should expect 14-bit and 12-bit to match until you get down to a read noise that 12-bit can't measure.

You can get an idea of what ISO settings, if any, would be limited by 12-bit by looking at the PhotonsToPhotos Read Noise in DNs chart. If the 14-bit read noise is less than about 4 DN (2 on the logarithmic y-axis), then 12-bit will be limited.
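A minimal Python sketch of the bit-depth arithmetic behind that rule of thumb, assuming the 12-bit file simply drops the 2 least significant bits of the 14-bit scale, so 1 DN at 12 bits equals 4 DN at 14 bits. A 14-bit read noise of about 4 DN is therefore about 1 DN at 12 bits, and smaller read noise is where 12-bit starts to fall short. The function names and the ISO/read-noise pairs are hypothetical, not PhotonsToPhotos data.

```python
import math

BITS_DROPPED = 14 - 12  # assumption: the 12-bit scale is the 14-bit scale divided by 4


def read_noise_12bit(read_noise_14bit_dn: float) -> float:
    """Express a read noise measured in 14-bit DN in 12-bit DN."""
    return read_noise_14bit_dn / 2 ** BITS_DROPPED


def twelve_bit_limited(read_noise_14bit_dn: float, threshold_14bit_dn: float = 4.0) -> bool:
    """Rule of thumb: 12-bit is limited once 14-bit read noise is below about 4 DN,
    i.e. below about 1 DN on the 12-bit scale."""
    return read_noise_14bit_dn < threshold_14bit_dn


if __name__ == "__main__":
    # Hypothetical read noise values in 14-bit DN
    for iso, rn14 in [(100, 2.5), (800, 6.0), (3200, 20.0)]:
        print(
            f"ISO {iso}: {rn14} DN (log2 = {math.log2(rn14):.2f}) at 14 bits, "
            f"{read_noise_12bit(rn14):.2f} DN at 12 bits, limited: {twelve_bit_limited(rn14)}"
        )
```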

Dec 31, 2022 11:48:18 #

selmslie wrote:

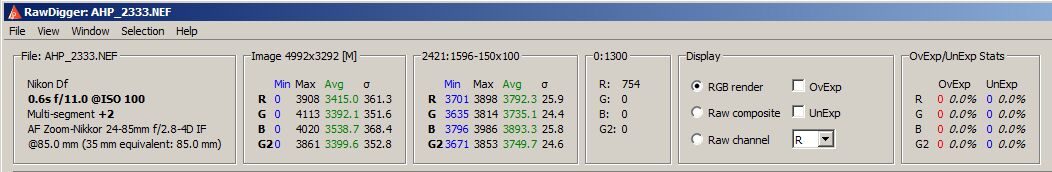

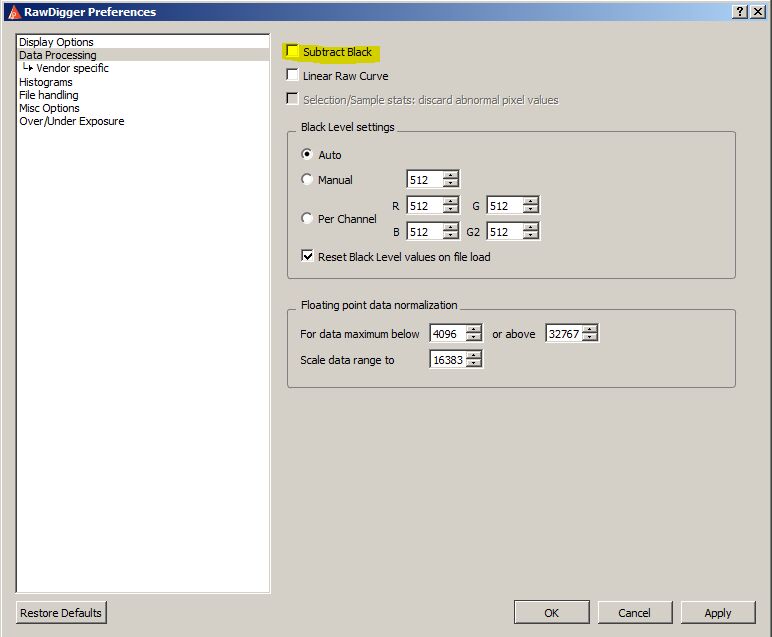

...I was just getting the raw values....

There's no need for the raw values since RawDigger computes the mean and standard deviation of the selection for you. Of course, you need to subtract BlackLevel from the mean if you have Subtract Black turned off. This is an ILCE-1 30 sec black frame.
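For anyone who does want to work from exported raw values, here is a minimal sketch of the same two statistics, assuming the selection has been exported as a flat list of DN values from one channel. `selection_stats` is a hypothetical helper, and the 512 DN black level in the example is an assumed value for illustration only.

```python
import statistics


def selection_stats(raw_values_dn, black_level_dn):
    """Return (mean, standard deviation) of a selection, with the black level subtracted.

    Subtracting a constant shifts the mean but leaves the standard deviation unchanged,
    so only the mean needs the BlackLevel correction.
    """
    mean = statistics.fmean(raw_values_dn) - black_level_dn
    std = statistics.stdev(raw_values_dn)  # sample standard deviation
    return mean, std


if __name__ == "__main__":
    black_frame = [510, 512, 514, 512, 511, 513]  # hypothetical raw values in DN
    mean, std = selection_stats(black_frame, black_level_dn=512)
    # For a black frame the mean should be close to 0 and std is the read noise in DN
    print(f"mean = {mean:.2f} DN, std (read noise) = {std:.2f} DN")
```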

Dec 31, 2022 03:34:29 #

selmslie wrote:

When I was testing linearity I noticed that some o...

I suggest that you take metering entirely out of the equation and simply use exposure time to vary the amount of light collected. Depending on the camera there are caveats.
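A minimal sketch of that approach: shoot the same target at a series of exposure times, measure the black-subtracted mean of a patch for each, and fit the slope of log2(signal) against log2(time). A slope close to 1.0 means doubling the exposure time doubles the raw value. The function name and sample data are hypothetical; points near clipping and near the noise floor will drift, and the camera-specific caveats are not modeled.

```python
import math


def linearity_slope(exposure_times_s, mean_signals_dn):
    """Least-squares slope of log2(mean signal) vs log2(exposure time).

    mean_signals_dn must already have the black level subtracted.
    A slope of ~1.0 indicates a linear response.
    """
    xs = [math.log2(t) for t in exposure_times_s]
    ys = [math.log2(s) for s in mean_signals_dn]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


if __name__ == "__main__":
    times = [1 / 1000, 1 / 500, 1 / 250, 1 / 125]  # one-stop steps
    signals = [100.0, 199.0, 402.0, 801.0]  # hypothetical black-subtracted means in DN
    print(f"slope = {linearity_slope(times, signals):.3f}")
```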

Dec 31, 2022 03:30:57 #

selmslie wrote:

I should have mentioned that the raw values I have been recording were taken from RawDigger's default spot 150x100 pixels selection at the center of the frame.

FWIW, my RawDigger doesn't have a default selection. Perhaps yours persisted from an earlier session. But you can always use Selection/set Selection by Numbers.

selmslie wrote:

...(COC)... noise becomes obvious when the standard deviation gets close to the average value.

You're implicitly referring to the Signal to Noise Ratio (SNR). And yes, the lower the SNR, the more apparent the noise. PhotonsToPhotos Photographic Dynamic Range uses a minimum SNR in addition to the Circle of Confusion. That's an important point I omitted earlier.

selmslie wrote:

To save time I only took the values from the G1 channel until the change in log2 of the raw values started to deviate by more than about 1 stop. Once that happened I took both the G1 and G2 values and averaged them as you can see in the spreadsheet.

It's of no practical consequence, but you ought to be consistent in your methodology. Remember that when you combine noise it should be sqrt((g1^2 + g2^2) / 2), not (g1 + g2) / 2.
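A short sketch of why the two averages differ: noise combines through variances, so the combined figure is the root mean square of the two standard deviations, while a plain arithmetic mean slightly understates it whenever G1 and G2 differ. The numbers are made up for illustration.

```python
import math


def combine_noise(g1_std, g2_std):
    """Combine two channel standard deviations by averaging their variances (RMS)."""
    return math.sqrt((g1_std ** 2 + g2_std ** 2) / 2)


def naive_average(g1_std, g2_std):
    """Arithmetic mean of the standard deviations (not the correct way to combine noise)."""
    return (g1_std + g2_std) / 2


if __name__ == "__main__":
    g1, g2 = 3.0, 4.0  # hypothetical G1 and G2 standard deviations in DN
    print(f"RMS: {combine_noise(g1, g2):.4f} DN, arithmetic mean: {naive_average(g1, g2):.4f} DN")
```

With 3 and 4 DN the RMS is about 3.54 DN versus 3.5 DN for the plain mean, which is why the difference is of no practical consequence here.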

Dec 31, 2022 03:16:59 #

selmslie wrote:

I will run the same test with the Z7 at its lower base ISO 64 since it also offers both 14- and 12-bit raw capture.

I no longer have the D610 but I will see if I can find a 12- to 14-bit comparison when I checked its linearity.

My A7 II no longer has its Bayer array and it only captures 14-bit raw but I will also run the test to see where the linearity starts to fail.

Test by all means, but you are going to find that all digital camera pixels are quite linear with respect to how much light is collected. This is really a basic premise of the technology. Pixel response becomes non-linear as the pixel saturates, but often the gain before the Analog to Digital Converter (ADC) is set in such a way as to only use the linear portion of the pixel response. At the low end, read noise will interfere with your observing the linearity of the pixel. To see why, you might want to look at some Photon Transfer Curves (PTCs) at PhotonsToPhotos.

Dec 31, 2022 03:07:59 #

selmslie wrote:

... I have been thinking of a different manifestation of DR that is independent of noise. ...

If you examine the numbers in the attached spreadsheet you will see that after EV -5 both sets of raw values get a bit squirrely.

That's due to noise, so you have not in fact made a test that is "independent of noise".

selmslie wrote:

... By that crude measure the Df seems to have a reliable DR of about 9 stops for the 14-bit raw file but only about 7 stops for the 12-bit raw file.

If I understand your protocol, you're implicitly at middle gray (18%), so you need to add about 2.5 stops to your estimate. 9 + 2.5 = 11.5, which is getting into the neighborhood of the 12.9 stops of EDR I measure at 14 bits for the Nikon Df. And yes, 12 bits would produce a lower value, probably 12 or 12.5 at best.
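The 2.5-stop correction is just the distance from middle gray to the top of the scale. A minimal sketch, assuming clipping corresponds to 100% reflectance so that 18% sits log2(1 / 0.18) stops below it; the function name is hypothetical.

```python
import math

MIDDLE_GRAY = 0.18  # 18% reflectance


def stops_from_middle_gray_to_clipping(middle_gray=MIDDLE_GRAY):
    """Stops between middle gray and clipping, assuming clipping = 100% reflectance."""
    return math.log2(1.0 / middle_gray)


if __name__ == "__main__":
    headroom = stops_from_middle_gray_to_clipping()
    estimate_from_gray = 9.0  # the crude 14-bit estimate quoted above, in stops
    print(f"headroom: {headroom:.2f} stops, corrected estimate: {estimate_from_gray + headroom:.1f} stops")
```

This prints a headroom of about 2.47 stops and a corrected estimate of about 11.5 stops.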

Dec 31, 2022 02:49:47 #

Strodav wrote:

...I started thinking of the makeup of the Bayer filter with 2x green samples compared to red and blue and that a relatively sophisticated algorithm is used to convert RGGB, and maybe some other surrounding samples, into a single output pixel. They are at least using 14 bits of red, 14 bits of blue and two 14-bit samples of green. So I'm thinking a bit more than 2^14 DR ...

The demosaicing process does consult neighboring pixels in a sophisticated way, but this doesn't change the underlying dynamic range.

Consider an ultra-simple demosaicing that combines the 4 pixels of a typical Color Filter Array (CFA).

Mathematically you would have 4x the signal (saturation) and 2x the noise (noise floor) so you might naively think you've got 2x (4x / 2x) more dynamic range. But you also increased the area of your "pixel" 4x! The combination has no effect on the dynamic range per unit area. The dynamic range of the underlying sensor pixel is not increased.

This is also the reason the pixel size has no effect on something like PhotonsToPhotos Photographic Dynamic Range or DxOMark Dynamic Range score ("print" dynamic range).
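A minimal sketch of that bookkeeping, assuming the four pixels have independent noise (so noise adds in quadrature, giving the 2x) and using made-up pixel values. Comparing a single binned "pixel" with a single native pixel shows the apparent 1-stop gain; comparing the same sensor area built either way shows no gain at all. The helper names are hypothetical.

```python
import math


def dr_stops(saturation, noise):
    """Dynamic range in stops (EV): log2 of saturation over the noise floor."""
    return math.log2(saturation / noise)


def area_dr(pixel_sat, pixel_noise, pixels_per_bin, area_in_pixels):
    """DR of a fixed sensor area assembled from bins of `pixels_per_bin` native pixels.

    Signals add linearly; independent noise adds in quadrature (sqrt of the count).
    """
    bins = area_in_pixels / pixels_per_bin
    bin_sat = pixel_sat * pixels_per_bin
    bin_noise = pixel_noise * math.sqrt(pixels_per_bin)
    return dr_stops(bin_sat * bins, bin_noise * math.sqrt(bins))


if __name__ == "__main__":
    sat, noise = 16000.0, 2.0  # hypothetical per-pixel saturation and read noise in DN
    print(f"native pixel: {dr_stops(sat, noise):.2f} EV")
    print(f"2x2 bin:      {dr_stops(4 * sat, 2 * noise):.2f} EV")  # +1 stop per "pixel"
    area = 64  # compare the same area, measured in native pixels
    print(f"same area, native: {area_dr(sat, noise, 1, area):.2f} EV")
    print(f"same area, binned: {area_dr(sat, noise, 4, area):.2f} EV")
```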

Dec 31, 2022 02:31:50 #

PHRubin wrote:

...Raising the ISO setting raises noise levels and drops the saturation level, reducing dynamic range capability.

Actually, FWIW, for sensors that implement the ISO setting using variable gain between the pixel and the Analog to Digital Converter (ADC), which is almost all of our cameras, raising the ISO setting lowers input-referred noise and drops the saturation level.

This is why you lose less than 1 stop of dynamic range when you raise the ISO setting 1 stop.

(Dual conversion gain and noise reduction aside.)

The more "ISO invariant" the sensor the less obvious this effect.

This is what PhotonsToPhotos Photographic Dynamic Range Shadow Improvement conveys.

It's also the reason that Input-referred Read Noise drops as you raise the ISO setting.

And the reason that astro-photographers shoot at higher ISO settings.
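A toy model of that behavior, with every number hypothetical: split read noise into a part that occurs before the variable gain and a part that occurs after it (including the ADC), refer both to the input in electrons, and let the clipping point in electrons fall as the gain rises. This is a common simplified way to describe ISO-variable sensors, not the PhotonsToPhotos method, and like the post it ignores dual conversion gain and noise reduction.

```python
import math


def edr_at_gain(full_well_e, pre_gain_noise_e, post_gain_noise_e, gain):
    """Engineering DR in stops for a two-stage read-noise model.

    gain is relative to base ISO (1 = base, 2 = one stop higher, ...).
    post_gain_noise_e is the downstream noise referred to the input at base gain,
    so at higher gain it shrinks by 1 / gain once referred back to the input.
    """
    clip_e = full_well_e / gain  # assumption: at base ISO the ADC clips at full well
    read_noise_e = math.sqrt(pre_gain_noise_e ** 2 + (post_gain_noise_e / gain) ** 2)
    return math.log2(clip_e / read_noise_e)


if __name__ == "__main__":
    fw, pre, post = 50000.0, 1.5, 6.0  # hypothetical electrons
    for stops in range(4):
        g = 2 ** stops
        loss = edr_at_gain(fw, pre, post, 1) - edr_at_gain(fw, pre, post, g)
        print(f"+{stops} stops of ISO: EDR {edr_at_gain(fw, pre, post, g):.2f} EV (loss {loss:.2f} EV)")
```

Setting `post` to 0 makes the model fully ISO invariant, and the loss becomes exactly 1 stop per stop of ISO, which matches the point that the effect is less obvious on more ISO-invariant sensors.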

Dec 30, 2022 11:18:08 #

TriX wrote:

...

I admit that I am still struggling with the idea that the resolution or DR of an ideal 14 bit A/D is 14.5 bits. In fact, with all the A/Ds I’m familiar with, we usually consider it to be n-.5 because of the uncertainty of the LSB, and I cannot find a reference where the resolution is ideally anything other than n. ... Can you comment and help me understand or provide a reference please?

Here's what I wrote at PhotonsToPhotos: Quantization Error in Practice

Jack Hogan came to the same conclusion using a different approach.

We make no assumption about LSB imprecision.

Remember, we're not talking about 1 sample but the standard deviation from many samples.
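A small simulation of why the answer has to come from the standard deviation of many samples, and of how quantization distorts that figure when the true noise is small. It draws Gaussian noise, rounds it to whole DN the way an ideal ADC would, and measures the standard deviation. This is an illustrative sketch under simple assumptions (pure Gaussian noise, ideal rounding, a hypothetical `measured_std` helper), not the analysis in Quantization Error in Practice.

```python
import random
import statistics


def measured_std(true_sigma_dn, offset_dn, n=20_000, seed=1):
    """Standard deviation of Gaussian noise after rounding to whole DN.

    offset_dn is where the mean level sits relative to the ADC steps
    (0.0 = on a step, 0.5 = halfway between two steps).
    """
    rng = random.Random(seed)
    samples = [round(offset_dn + rng.gauss(0.0, true_sigma_dn)) for _ in range(n)]
    return statistics.pstdev(samples)


if __name__ == "__main__":
    for sigma in (3.0, 1.0, 0.5, 0.25, 0.05):
        on_step = measured_std(sigma, 0.0)
        halfway = measured_std(sigma, 0.5)
        print(f"true {sigma:.2f} DN -> measured {on_step:.3f} (on a step), {halfway:.3f} (halfway)")
```

For large noise the measured value tracks the true value closely; as the true noise shrinks to a fraction of 1 DN the result depends on the offset, which is the sense in which quantization limits the smallest read noise that can be measured.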

Dec 29, 2022 20:10:41 #

selmslie wrote:

Where did that data come from?

I have a protocol for gathering raw data files that I feed into my analysis software.

I personally gather most of the data but many people have also delivered data sets to me for analysis.

If anyone has access to a camera that is not represented at PhotonsToPhotos, they can email or PM me for details.

Dec 29, 2022 20:08:19 #

selmslie wrote:

.... The DR of the sensor (before the A/D converter) does not change.

But if the resulting raw file is 12-bit, it can only hold 12 stops of data. A 14-bit raw file can hold 14 stops.

...

If the (E)DR of the pixel is less than or equal to 12.5 EV (stops), it doesn't matter that a 14-bit file can hold more dynamic range.
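A crude sketch of that reasoning, treating the "about n.5 EV" ceiling for an n-bit ADC as a hard cap. Real quantization effects blend in gradually rather than as a sharp cutoff, so this is only a rule of thumb; `recorded_edr` is a hypothetical helper and the pixel EDRs are example values.

```python
def recorded_edr(pixel_edr_ev: float, adc_bits: int) -> float:
    """Rule of thumb: an n-bit ADC can record at most about n + 0.5 EV of EDR."""
    return min(pixel_edr_ev, adc_bits + 0.5)


if __name__ == "__main__":
    for pixel_edr in (11.0, 12.5, 12.9, 14.2):  # example pixel EDRs in EV
        print(
            f"pixel EDR {pixel_edr:.1f} EV -> 12-bit: {recorded_edr(pixel_edr, 12):.1f} EV, "
            f"14-bit: {recorded_edr(pixel_edr, 14):.1f} EV"
        )
```

Only when the pixel EDR exceeds about 12.5 EV does the 14-bit file record more.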

Dec 29, 2022 20:04:30 #

TriX wrote:

I’ve asked the owner of the Photons to Photos site and an expert on the subject (Bill Claff) to comment - I hope he will. Lots of assumptions here…

I'll try to stick to the necessary facts and to present them in a logical order.

Dynamic range is the ratio of a high value to a low value, expressed as a logarithm.

In engineering it's log10, and the typical unit is the decibel (dB).

In photography it's log2 which I usually call an EV (Exposure Value). People also say "stops".

For sensors we use the clipping value as the high value, although because of the nature of logarithms it's not critical to get the exact value.

For example there's no meaningful difference between log2 of 16300 and log2 of 16000.

For the low value we use what is called the noise floor.

At the pixel level this is read noise. Read noise is determined statistically; it's a standard deviation.

I call pixel-level dynamic range Engineering Dynamic Range (EDR); DxOMark calls it "screen".
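A minimal sketch of those definitions in Python. The dB conversion uses 20·log10, the usual convention for amplitude-like quantities such as DN or electrons (an assumption about which convention is meant); the clipping and read-noise values are hypothetical.

```python
import math


def dr_ev(high, low):
    """Dynamic range in EV (stops): log2 of the high/low ratio."""
    return math.log2(high / low)


def dr_db(high, low):
    """Dynamic range in decibels, using 20*log10 for amplitude-like ratios."""
    return 20 * math.log10(high / low)


def edr_ev(clip_dn, read_noise_dn):
    """Pixel-level Engineering Dynamic Range: clipping value over read noise, in EV."""
    return dr_ev(clip_dn, read_noise_dn)


if __name__ == "__main__":
    # The exact clipping value barely matters on a log scale
    print(f"log2(16300) - log2(16000) = {dr_ev(16300, 16000):.3f} EV")
    clip, read_noise = 16000, 3.0  # hypothetical 14-bit clip level and read noise in DN
    print(f"EDR = {edr_ev(clip, read_noise):.2f} EV = {dr_db(clip, read_noise):.1f} dB")
    print(f"1 EV = {dr_db(2, 1):.2f} dB")
```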

When we measure read noise we do it from raw data that is recorded in Digital Numbers (DNs), also known as Analog to Digital Units (ADUs).

Because an Analog to Digital Converter (ADC) changes the analog voltage into an integer, it is subject to something called quantization error.

Mathematically, quantization error limits the smallest read noise (and therefore the highest dynamic range) that can be measured.

An n-bit ADC is limited to about n.5 EV of dynamic range, e.g., a 14-bit ADC is limited to 14.5 (not 14) EV (stops).

Once the ADC has sufficient bit depth to resolve read noise, additional bits will not result in more dynamic range.

At PhotonsToPhotos I use the standard Circle of Confusion (COC) to create a virtual pixel that has the area of the COC.

This is used as the noise floor for Photographic Dynamic Range (PDR, as distinct from EDR).

This measure is resolution independent and I do not resample to some set resolution.

At DxOMark they simply take their "screen" read noise and normalize for an 8MP image.

They call this "print" as opposed to "screen".

Their approach is inferior to that at PhotonsToPhotos because underneath it makes an assumption that something called the Photon Transfer Curve (PTC) is a straight line (at least at the low end) and it is not.

This can lead to clearly wrong results.
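For comparison, a sketch of an 8 MP normalization of the kind described, assuming DxOMark's "print" figure shifts the per-pixel ("screen") DR by half a stop for every doubling of pixel count relative to 8 MP, i.e. scales the noise floor by the square root of the pixel-count ratio. That is an assumption about their method, and the post above argues this kind of normalization rests on a straight-line PTC; it is not how PhotonsToPhotos computes PDR.

```python
import math

REFERENCE_PIXELS = 8_000_000  # assumed DxOMark "print" reference size: 8 MP


def print_dr(screen_dr_ev, pixel_count):
    """Normalize per-pixel ("screen") DR to an 8 MP output size.

    Averaging k pixels down to one lowers uncorrelated noise by sqrt(k),
    which adds 0.5 * log2(k) stops to the DR.
    """
    return screen_dr_ev + 0.5 * math.log2(pixel_count / REFERENCE_PIXELS)


if __name__ == "__main__":
    for mp in (8, 24, 45, 61):
        print(f"{mp} MP, screen 12.0 EV -> print {print_dr(12.0, mp * 1_000_000):.2f} EV")
```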

You can read more about these topics and see data like PTCs at PhotonsToPhotos.

Dec 29, 2022 18:07:04 #

TriX wrote:

I’ve asked the owner of the Photons to Photos site and an expert on the subject (Bill Claff) to comment - I hope he will. Lots of assumptions here…

I have made some quick comments. A more comprehensive reply will follow after dinner.

A lot of this is covered at PhotonsToPhotos.

You can start by following the Further Reading links under the Photographic Dynamic Range Chart

(1st link on the main page)

But I suspect most will wait for my "executive summary."